The following is a live blog from State Of Search. Please pardon any typos or grammar issues as we really didn’t have time for much proofing between sessions.

Rand starts by talking about how difficult it has become to reverse engineer Google’s algorithm. Once upon a time, humans wrote the algorithms, and they weren’t overly sophisticated, so they got abused. Wil Reynolds wrote a great piece about Google making liars out of good SEOs that was accurate in 2012.

But then it changed …

Google now looks at language to predict what you want and what you mean. This includes timing: marketing-related queries, for example, tend to return current results.

Factors like exact match still work, but not the way they used to.

Google has gotten very good at understanding entities and intent. He references a chat with Bill Slawski about brands being entities. Entities get markup in the results, and he suggests that might influence clickthroughs.

These enhancements brought Google largely back in line with its public statements, and they stem from internal philosophical changes. Early on, Google didn’t like machine learning, and Amit Singhal had a bias against it. In 2012 Google published a paper on using machine learning to predict ad clickthrough rates, so we knew they were using it on the paid side.

In 2013, Matt Cutts discussed machine learning in organic rankings at Pubcon. Fortunately, Google is public about how it uses machine learning for image recognition and classification. They take potential identifying features (colors, shapes, etc.) and couple them with training data (images labeled by humans). The machine is then trained to “recognize” objects, locations, etc., and it does so very well.

He goes on to discuss how machine learning could work in search:

- take all potential ranking factors

- add manually labeled good and bad results

- teach the machine to adjust the factors to improve the odds of a query producing good results
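The three steps above describe supervised learning. As a rough illustration only (not Google’s actual system, which is not public), the sketch below uses logistic regression fitted with gradient descent: each result is a vector of ranking-factor values, a human labels it good (1) or bad (0), and the model adjusts the factor weights so that good results score higher. The factor names, values, and labels are hypothetical toy data.

```python
import math

# Hypothetical ranking factors, each scaled to 0..1; a real system would use far more.
FACTORS = ["keyword_match", "link_score", "page_speed"]


def predict(weights, bias, features):
    """Probability that a result is 'good', via a logistic (sigmoid) model."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train(examples, epochs=2000, lr=0.5):
    """Fit factor weights to human labels (1 = good, 0 = bad) using
    batch gradient descent on log loss."""
    weights = [0.0] * len(FACTORS)
    bias = 0.0
    n = len(examples)
    for _ in range(epochs):
        grad_w = [0.0] * len(FACTORS)
        grad_b = 0.0
        for features, label in examples:
            error = predict(weights, bias, features) - label
            for i, x in enumerate(features):
                grad_w[i] += error * x
            grad_b += error
        # Move each weight against its average gradient.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical human-labeled results: (factor values, judgment).
labeled = [
    ([0.9, 0.8, 0.7], 1),
    ([0.8, 0.9, 0.9], 1),
    ([0.9, 0.1, 0.2], 0),
    ([0.2, 0.2, 0.8], 0),
    ([0.7, 0.7, 0.6], 1),
    ([0.3, 0.1, 0.1], 0),
]

weights, bias = train(labeled)
for name, w in zip(FACTORS, weights):
    print(f"{name}: {w:+.2f}")
```

In this toy data the good results share high link scores, so the learned weight on `link_score` ends up doing most of the work. Nobody told the model that; it inferred it from the labels, which is the point Rand makes about machines adjusting the factors themselves.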

Deep learning is even more advanced …

With deep learning, they don’t have to teach the system what a cat is. They feed it data and a way to learn, and the system learns to recognize entities on its own and can thus begin to develop its own algorithm. Many scholars are concerned about what this means for mankind in the long term.

Unfortunately, this creates a scenario where no human knows what makes a site rank. Success metrics will be the only thing that matters to the machine, for example:

- Long-click ratios (time from leaving Google to returning)

- Relative CTR vs other results

- Rate at which searchers conduct additional, related searches

- Sharing and amplification rate

- User engagement on domain/URL
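To make a few of these success metrics concrete, here is a minimal sketch that computes CTR, long-click ratio, and relative CTR from a click log for a single query. It assumes a hypothetical log format in which each impression records whether the result was clicked and how many seconds passed before the searcher returned to Google (None if they never came back), and it uses a hypothetical 60-second cutoff for a long click. Relative CTR here is simply a result’s CTR divided by the average CTR of all results in the log; Google’s real definitions and thresholds are not public.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical cutoff: a click that keeps the searcher away from Google
# for at least this many seconds counts as a "long click".
LONG_CLICK_SECONDS = 60


@dataclass
class Impression:
    url: str
    clicked: bool
    # Seconds between leaving Google and returning to the results;
    # None means the searcher never came back.
    seconds_away: Optional[float] = None


def is_long_click(imp: Impression) -> bool:
    return imp.clicked and (imp.seconds_away is None or imp.seconds_away >= LONG_CLICK_SECONDS)


def success_metrics(log: list[Impression]) -> dict[str, dict[str, float]]:
    """Per-URL CTR, long-click ratio, and CTR relative to the average result."""
    by_url: dict[str, list[Impression]] = {}
    for imp in log:
        by_url.setdefault(imp.url, []).append(imp)

    ctr = {url: sum(i.clicked for i in imps) / len(imps) for url, imps in by_url.items()}
    avg_ctr = sum(ctr.values()) / len(ctr)

    results = {}
    for url, imps in by_url.items():
        clicks = [i for i in imps if i.clicked]
        long_ratio = sum(is_long_click(i) for i in clicks) / len(clicks) if clicks else 0.0
        results[url] = {
            "ctr": ctr[url],
            "long_click_ratio": long_ratio,
            "relative_ctr": ctr[url] / avg_ctr if avg_ctr else 0.0,
        }
    return results


# Hypothetical log for one query with two results.
log = [
    Impression("a.example", True, 12),
    Impression("a.example", True, 8),
    Impression("a.example", False),
    Impression("a.example", False),
    Impression("b.example", True, 240),
    Impression("b.example", True, None),
    Impression("b.example", True, 90),
    Impression("b.example", False),
]

for url, m in success_metrics(log).items():
    print(url, m)
```

In this made-up log, a.example gets clicked but searchers bounce back within seconds (long-click ratio 0.0, relative CTR 0.8), while b.example both beats the average CTR (1.2×) and keeps every visitor (long-click ratio 1.0). That is the kind of result the metrics above would favor.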

This is a very different world to optimize in, because the optimization will be for users. Traditional factors (title tags, anchors, etc.) may well still be used, but they won’t be the success metrics a machine uses to determine whether a result is good.

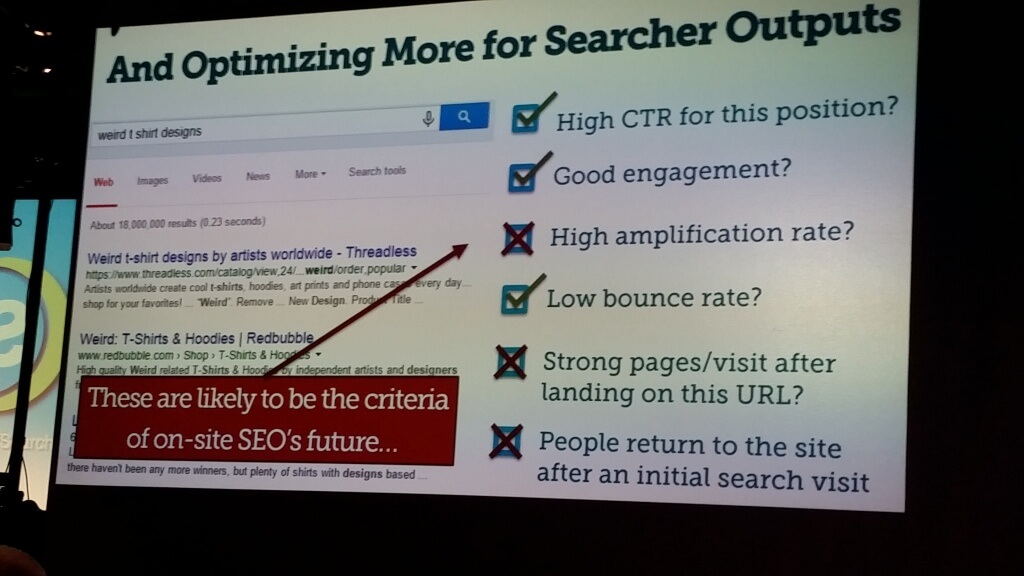

He shows the following slide …

Rand references query-and-click tests in which he pushed a site up the rankings with clicks alone. That has gotten harder to do since 2014. At SMX Advanced, Gary Illyes noted, “Using clicks directly in ranking would not make too much sense with that noise.”

With that in mind, he tried something new: long clicks vs. short clicks. He tested with Bobby Flay’s site, which was #1 for the phrase he targeted, and had people short-click on Bobby Flay’s result and then long-click on Serious Eats. Forty minutes later Serious Eats was #1, and after 70 minutes and 500 interactions it was #1. It then fell to page two before rising back to #4, where it started.

On October 26, 2015, Google discussed turning search systems over to AI. Rand stresses that this is happening NOW. He is referring to RankBrain, which we discussed in an earlier post on our blog.

In this world, we have to choose: the old signals or the new …

Classic:

- Keywords

- Quality and uniqueness

- Crawl/bot friendly

- Snippet optimization

- UX/Multi device

- Links and anchors

New:

- CTR

- Short vs. long click

- Content gap fulfillment

- Task completion

- Amplification

- Brand search and loyalty

5 Modern Elements Of SEO

- Punching above the average CTR for your position. This makes the display of a search result increasingly important. (My note: since traffic is the key to begin with, this really should always have been the first thing we look at.)

Google frequently tests new results on page one. If you publish something that doesn’t perform well, it may be better to republish on the topic from scratch than to tweak it.

- Beating out your fellow search results on engagement. Pogo-sticking and long clicks can determine your ranking and, more importantly, how long you rank. This makes factors like speed, the best UX, and answering questions users didn’t know they had until they read your content increasingly important.

- Filling gaps in your visitors’ knowledge. Google will want to know that the page fulfills all of the searcher’s needs. For example, content about New York would include words like Brooklyn. Moz is working on a tool to help with this, but in the meantime AlchemyAPI and MonkeyLearn can help (Eric).

- Fulfilling a searcher’s need. Google wants searchers to accomplish their tasks faster. The ultimate goal is to take you from the broad search right through to the end of your task. For example, if they see people searching a variety of terms around instant ramen noodles, they will find a site like “TheRamenRater”, which now ranks because users ended their searches there.

- Earning more shares, links and loyalty per visit. Pages that get lots of social activity and engagement but few links seem to over-perform, despite Google stating that social signals are not a factor. Rand believes Google here. Moz worked with BuzzSumo and found that shares and links have little correlation and that most content doesn’t get shared at all. Raw share data probably doesn’t matter, because a share doesn’t necessarily indicate engagement (a user may share without reading, for example). Moz is testing whether shares combined with engagement impact results, but in the end Rand wisely points out that it almost doesn’t matter: shares and engagement should be goals in their own right. So we don’t just need “better content”. What we need is 10x content (content that is ten times better than the currently ranking content), because the top 10% of content gets almost all the shares.
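One simple way to approximate the content-gap check described in “Filling gaps in your visitors’ knowledge”, assuming you already have the text of the pages currently ranking, is to find terms that most competing pages use but yours does not. This plain word-overlap heuristic is our own stand-in, not how Moz, AlchemyAPI, or MonkeyLearn work (those tools do proper entity and keyword extraction); the function name, the 50% threshold, the stopword list, and the sample text are all hypothetical.

```python
import re
from collections import Counter


def terms(text: str) -> set[str]:
    """Lowercased words in the text (a deliberately crude tokenizer)."""
    return set(re.findall(r"[a-z]+", text.lower()))


def content_gaps(page, competitors, min_share=0.5, stopwords=frozenset()):
    """Hypothetical helper: terms used by at least `min_share` of the
    competing pages but missing from `page`."""
    counts = Counter()
    for doc in competitors:
        counts.update(terms(doc) - stopwords)  # each page counts a term once
    threshold = min_share * len(competitors)
    page_terms = terms(page)
    return sorted(t for t, c in counts.items() if c >= threshold and t not in page_terms)


page = "New York travel guide: Manhattan museums and Central Park."
competitors = [
    "Visiting New York: Manhattan, Brooklyn and the subway.",
    "New York guide covering Brooklyn, Queens and Central Park.",
    "Things to do in New York, from Brooklyn bridges to Manhattan museums.",
]
stop = frozenset({"and", "the", "to", "in", "from", "do", "things"})

print(content_gaps(page, competitors, stopwords=stop))  # ['brooklyn']
```

Every competing page mentions Brooklyn and this page doesn’t, which is exactly the New York example from the talk. A real tool would compare entities and weight them by relevance rather than counting raw words.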

We Are in a Two-Algorithm Future

- Google

- Users

He calls “make pages for people, not engines” bad advice. We need to do both.

The presentation can be found at bit.ly/twoalgo